Our eyes extract a lot of information from visible light that enables us to see color, movement, shadows, highlights, shapes, and more, with each component processed separately and sent to the brain in parallel to the others. It was previously thought that the same scene would always be converted into the same pattern of activity. But research by scientists at the University of Tübingen in Germany and the University of Manchester in the UK suggests that the signals differ wildly as the brightness of the environment changes by even small amounts.

When brightness changes, what you see remains the same – or close to it – but how the nerve impulses in your visual system respond is fundamentally different. "Imagine you go skiing and enjoy the panoramic view of the mountains, once with and once without wearing sunglasses," says co-author Katja Reinhard. "In these two conditions the information channels of the retina will send very different signals to the brain. The language of the retina has changed simply because of the sunglasses, even though you are looking at the same scene."

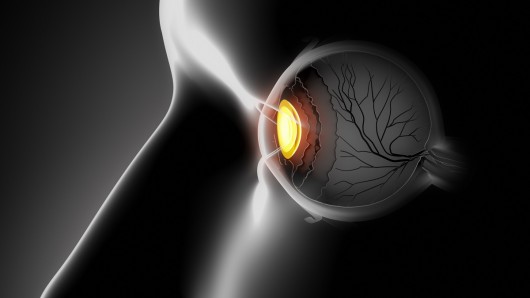

Part of the difference appears to be due to the activation or deactivation of rods and cones in the retina as you switch between scotopic (dim light), photopic (bright light), and mesopic (a mixture of the two) vision. But the study found that many ganglion cells, which are responsible for transmitting visual information to the brain, exhibited different response patterns as light transitioned within the scotopic range, which suggests that there's more to the story.

The researchers observed that most ganglion cells responded reliably at a specific luminance level, even when such trials were bookended by exposure to higher or lower light levels. This suggests that the neural pattern generated by the retinal ganglion cells is luminance dependent, and it poses new questions as to how the brain decodes this information to allow us to see.

In particular, the researchers note that these results raise the question of how the brain copes with the changes in retinal output and still recognizes the same image, and whether the altered patterns carry important information or are instead filtered out and discarded.

They don't have enough data to reach any conclusions on this or on the broader question of how the retina encodes visual information in the first place, but they hope that future research will fill in some of the gaps. A better grasp of the process wouldn't just benefit our understanding of how people and other mammals see, but could also help improve digital cameras, visual prostheses, and other vision-related technical equipment.

A paper describing the research was published in the journal Nature Neuroscience.
