Computers aren't best suited to visual object recognition. Our brains are hardwired to quickly see and match patterns in everything, with great leaps of intuition, while the processing center of a computer is more akin to a very powerful calculator. But that hasn't stopped neuroscientists and computer scientists from trying over the past 40 years to design computer networks that mimic our visual skills. Recent advances in computing power and deep learning algorithms have accelerated that process to the point where a group of MIT neuroscientists has found a network design that compares favorably to the brain of our primate cousins.

This is important beyond the needs of automated digital information processing like Google's image search. Computer-based neural networks that work like the human brain will further our understanding of how the brain works, and any attempts to create them will test that understanding. Essentially, the fact that these networks work to a level comparable to primates suggests that neuroscientists now have a solid grasp of how object recognition works in the brain.

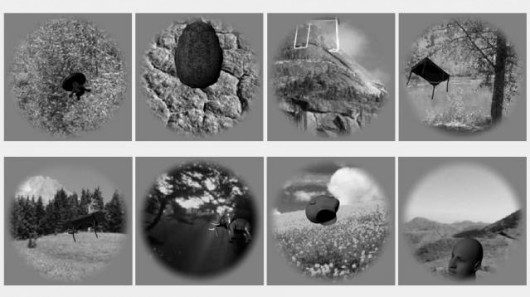

To see how current networks hold up, the MIT scientists started by testing primates. They implanted arrays of electrodes in the inferior temporal (IT) cortex and area V4 (a part of the visual system that feeds into the IT cortex) of the primates' brains. This allowed them to see how neurons related to object recognition responded when the animals looked at various objects in 1,960 images. (The viewing time per image was a mere 100 milliseconds, which is long enough for humans to recognize an object.)

They then compared these results with those of the latest deep neural networks. These networks produce arrays of numbers when fed an image, with different numbers for different images. If a network groups similar objects into similar clusters in this numerical representation, it's deemed accurate.
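To make the clustering idea concrete, here is a minimal sketch in Python. It is not the metric the MIT team used. It assumes each image has already been turned into a short list of numbers, and it scores a representation by how often an image sits closest to the average of its own category. The function names, the toy categories, and the synthetic feature vectors are all invented for illustration.

```python
import math
import random


def centroid(vectors):
    """Average a list of equal-length feature vectors, component by component."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def distance(a, b):
    """Euclidean distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest_centroid_accuracy(features, labels):
    """Leave-one-out score: the fraction of images whose nearest category
    average (computed without that image) belongs to their own category."""
    correct = 0
    for i, (vec, label) in enumerate(zip(features, labels)):
        groups = {}
        for j, (other_vec, other_label) in enumerate(zip(features, labels)):
            if j != i:
                groups.setdefault(other_label, []).append(other_vec)
        centroids = {name: centroid(vs) for name, vs in groups.items()}
        predicted = min(centroids, key=lambda name: distance(vec, centroids[name]))
        correct += predicted == label
    return correct / len(features)


def make_toy_features(rng, centers, per_class, spread):
    """Hypothetical stand-in for network output: noisy points around one
    center per category. A larger spread means a blurrier representation."""
    features, labels = [], []
    for label, center in centers.items():
        for _ in range(per_class):
            features.append([c + rng.gauss(0, spread) for c in center])
            labels.append(label)
    return features, labels


rng = random.Random(0)
centers = {
    "car": [0.0, 0.0, 0.0],
    "face": [5.0, 5.0, 5.0],
    "fruit": [-5.0, 5.0, 0.0],
}

# A representation that keeps each category tightly grouped...
tight, tight_labels = make_toy_features(rng, centers, per_class=20, spread=0.5)
# ...and one where the categories are smeared into each other.
loose, loose_labels = make_toy_features(rng, centers, per_class=20, spread=50.0)

tight_acc = nearest_centroid_accuracy(tight, tight_labels)
loose_acc = nearest_centroid_accuracy(loose, loose_labels)
print(f"tight clusters: {tight_acc:.2f}, smeared clusters: {loose_acc:.2f}")
```

The tightly grouped representation scores far higher than the smeared one, which is the sense in which a network that clusters similar objects together counts as accurate.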

"Through each of these computational transformations, through each of these layers of networks, certain objects or images get closer together, while others get further apart," explains lead author Charles Cadieu.

The best network, developed by researchers at New York University, classified objects as well as the macaque (a medium-sized Old World monkey) brain.

That's the good news. The bad news is that the researchers don't know why. Neural networks learn from massive datasets containing millions or billions of images, churning through the information with help from the high-performance graphics processing units that power the latest video games. But nobody knows quite what is going on in there as the networks refine their own algorithms.

Now that we know the latest deep neural networks perform comparably to primates at object recognition, though, Cadieu suggests other scientists will put more effort into picking apart the process to understand how computer systems that begin with nothing more than a learning algorithm arrive at this level.

The MIT researchers now plan to develop deep neural networks that mimic other visual processing systems, such as motion tracking and recognizing three-dimensional forms.

A paper describing the research was published in the journal PLoS Computational Biology.
