Researchers have developed a technology that can remotely control an animal's movement with human thought.

In the 2009 blockbuster "Avatar," a human remotely controls the body of an alien by injecting human intelligence into a remotely located, biological body. Although this remains in the realm of science fiction, recent advances in electronics and computing have led researchers to develop so-called 'brain-computer interfaces' (BCIs). These technologies can 'read' human thought and use it to control machines, such as humanoid robots.

New research has demonstrated the possibility of combining a BCI with a device that transmits information from a computer to a brain, or a so-called 'computer-to-brain interface' (CBI). The combination of these devices could be used to establish a functional link between the brains of different species. Now, researchers from the Korea Advanced Institute of Science and Technology (KAIST) have developed a human-turtle interaction system in which a signal originating from a human brain can affect where a turtle moves.

Unlike previous research that has tried to control animal movement with invasive methods, most notably in insects, the KAIST researchers propose a conceptual system that guides an animal's path by controlling its instinctive escape behaviour. They chose the turtle because of its cognitive abilities and its ability to distinguish different wavelengths of light. Specifically, turtles recognize a white light source as an open space and move toward it. They also show specific avoidance behaviour toward things that might obstruct their view, and they move toward and away from obstacles in their environment in a predictable manner. It was this instinctive, predictable behaviour that the researchers induced using the BCI.

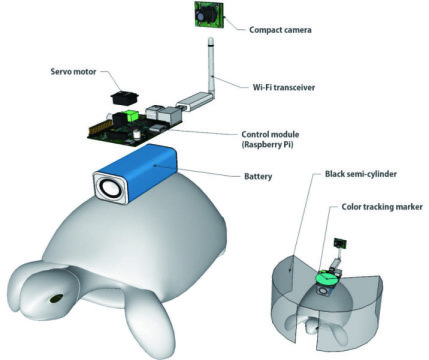

The human-turtle setup is as follows. A head-mounted display (HMD) is combined with a BCI to immerse the human user in the turtle's environment. The human operator wears the BCI-HMD system, while the turtle carries a 'cyborg system' (a camera, a Wi-Fi transceiver, a computer control module and a battery) mounted on its upper shell. Also mounted on the shell is the 'stimulation device': a black semi-cylinder with a slit, which can be turned ±36 degrees via the BCI.

The process works like this: the human operator receives real-time video from the camera mounted on the turtle and uses it to decide where the turtle should move. The operator then issues thought commands, which the wearable BCI system recognizes from electroencephalography (EEG) signals. The BCI distinguishes between three mental states: left, right and idle. A left or right command rotates the turtle's stimulation device via Wi-Fi so that it obstructs the turtle's view. This triggers the turtle's instinct to move toward light, causing it to change direction. Finally, the operator receives updated visual feedback from the shell-mounted camera and, in this way, continues to steer the turtle remotely.
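The operator-side decision step in this loop can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not the KAIST team's implementation. Only two details come from the article: the BCI separates three mental states (left, right, idle), and the stimulation device turns up to 36 degrees either way. Everything else is an assumption made for illustration: the names `MentalState`, `device_angle` and `navigate`, the sign convention (left as -36, right as +36), the choice to send nothing on "idle", and the `send_command` callback standing in for the Wi-Fi link.

```python
from enum import Enum
from typing import Callable, Iterable, List, Optional

# From the article: the stimulation device can be turned up to 36 degrees either way.
MAX_ANGLE_DEG = 36.0


class MentalState(Enum):
    """The three mental states the BCI distinguishes (per the article)."""
    LEFT = "left"
    RIGHT = "right"
    IDLE = "idle"


def device_angle(state: MentalState) -> Optional[float]:
    """Map a decoded mental state to a target rotation for the stimulation device.

    Hypothetical convention: negative angles mean 'left', positive mean 'right'.
    IDLE returns None, i.e. no command is sent to the turtle (an assumption).
    """
    if state is MentalState.LEFT:
        return -MAX_ANGLE_DEG
    if state is MentalState.RIGHT:
        return MAX_ANGLE_DEG
    return None


def navigate(
    decoded_states: Iterable[MentalState],
    send_command: Callable[[float], None],
) -> List[float]:
    """Forward each non-idle decision to the turtle and return the angles sent.

    `send_command` is a hypothetical stand-in for the Wi-Fi link to the
    turtle's computer control module.
    """
    sent: List[float] = []
    for state in decoded_states:
        angle = device_angle(state)
        if angle is not None:
            send_command(angle)
            sent.append(angle)
        # In the real system the operator would watch updated camera video on
        # the HMD before producing the next decision; that step is not modeled.
    return sent


if __name__ == "__main__":
    link_log: List[float] = []
    decisions = [MentalState.LEFT, MentalState.IDLE, MentalState.RIGHT]
    print(navigate(decisions, link_log.append))  # [-36.0, 36.0]
```

Keeping the state-to-angle mapping separate from the transport callback means the same logic could drive a simulator or a real network client. The article does not describe how the actual system encodes its Wi-Fi messages.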

The research demonstrates that the BCI-based guidance scheme works in a variety of environments: turtles were steered indoors and outdoors, over surfaces such as gravel and grass, and past obstacles such as shallow water and trees. The technology could be extended with positioning systems and improved augmented and virtual reality techniques, enabling applications such as devices for military reconnaissance and surveillance.
